How to add Dynamic Audio in Ren'Py (it's easier than you think!)

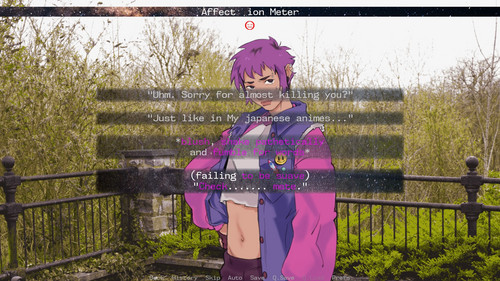

A couple of people have been asking me about this, so I decided the best thing to do was to write up a post about it! In com__et, the bulk of the game has one dynamic track playing, and on a second playthrough the layers of the audio shift based on an affect__ion meter. This means that the song is made of different layers exported as individual wav files, and their volume gets adjusted over time. Check out this WIP video that demos it changing over time.

So, how did I do that? I'm doing my best to make this pretty understandable without much programming knowledge. But I'm happy to clarify bits that maybe require more than I thought!

1) Write a layered song.

I won't pretend to know a lot about this but I will shout out the course I've been using to learn it, which has exercises on making dynamic music. Put most simply, you want to have a song made of layers that all mesh together cohesively and the timings all align. Even if there are gaps of silence, at the start or end, make sure they're all the exact same length.

Export them all separately. You can do as many layers as you want. I did one instrument per layer, though you could combine some if you're happy for them to share the same volume.

2) Coding time - Add your channels

The first thing you need to do is add channels that'll contain all your tracks. By default, Ren'Py has a channel for music, for sound and for voices. Each of these can play one audio file at a time, but my song has 5 layers, so playing all of those concurrently on the default channels won't be possible. (There's no point at which all 5 would be audible at the same time, but as you'll see later, it's best to keep them all constantly running anyway.)

Luckily, Ren'Py lets you add additional channels, as many as you want. Here's how mine looks:

    init -1 python:
        # One channel per layer, all attached to the "music" mixer
        renpy.music.register_channel("layer1a", "music")
        renpy.music.register_channel("layer1b", "music")
        renpy.music.register_channel("layer2", "music")
        renpy.music.register_channel("layer3", "music")
        renpy.music.register_channel("layer4", "music")

Yeah it was a bit silly of me to have 1a and 1b for no real reason, but I just ran with it.

All a channel needs is a name that I can refer to and a mixer to belong to (music, sfx or voice). The mixer is important because in preferences the player can choose the mix for these types of sounds, and I don't want the volume of the music to suddenly change because I'm using uncontrolled custom channels.

Otherwise, that's all there is to it! For anyone very new to coding, I'll post a full block of how this works at the end. But to explain: the init -1 python: line at the start basically tells Ren'Py to run all of this during initialization, before the game itself starts, so that these channels and all our other code can be referenced throughout.
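
If your list of layers grows, the same registration could be written as a loop. This is only a sketch: `LAYER_CHANNELS` and `register_layer_channels` are names I made up for illustration, and the register function is passed in as an argument so the snippet also runs outside Ren'Py.

```python
# Hypothetical helper (not part of Ren'Py): one channel name per layer.
LAYER_CHANNELS = ["layer1a", "layer1b", "layer2", "layer3", "layer4"]


def register_layer_channels(register, names=LAYER_CHANNELS, mixer="music"):
    """Call register(name, mixer) once for every layer channel name."""
    for name in names:
        register(name, mixer)


# Stand-in for renpy.music.register_channel so this runs outside Ren'Py.
registered = []
register_layer_channels(lambda name, mixer: registered.append((name, mixer)))
```

Inside the game you would presumably call `register_layer_channels(renpy.music.register_channel)` from the init block instead of using the stand-in.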

3) Now add a function to start your track

To actually start our song, what we want is to begin every single track at the same time. Since they're separate channels, we can assign one audio file to each. Getting the sync right would be tricky, except Ren'Py has an argument for exactly that, called synchro_start:

> Ren'Py will ensure that all channels of with synchro_start set to true will start playing at exactly the same time. Synchro_start should be true when playing two audio files that are meant to be synchronized with each other.

    def start_layers(delay=3):
        renpy.music.play("audio/music/SoNice1b.wav", channel='layer1b', loop=True, synchro_start=True, fadein=delay)
        renpy.music.play("audio/music/SoNice1a.wav", channel='layer1a', loop=True, synchro_start=True, fadein=delay)
        renpy.music.play("audio/music/SoNice2.wav", channel='layer2', loop=True, synchro_start=True, fadein=delay)
        renpy.music.play("audio/music/SoNice3.wav", channel='layer3', loop=True, synchro_start=True, fadein=delay)
        renpy.music.play("audio/music/SoNice4.wav", channel='layer4', loop=True, synchro_start=True, fadein=delay)

It says two but... we just still do 5. This is a lifesaver, because it solves a key need here: all the tracks should line up all the time. This is why I start every track together and just loop them from the start, even though to begin with three of these will be muted entirely. Just having them run in the background makes all the transitions seamless, and it's much simpler than having to crunch numbers about how far into the song we are in order to start tracks at specific timestamps.

I've also included the ability to set an amount of time to spend fading in, so that we're still able to control this as if it was an ordinary single track song. Also the function to stop the layers looks the same, just simpler, so I'll just show that here too:

    def stop_layers(delay=None):
        renpy.music.stop(channel='layer1a', fadeout=delay)
        renpy.music.stop(channel='layer1b', fadeout=delay)
        renpy.music.stop(channel='layer2', fadeout=delay)
        renpy.music.stop(channel='layer3', fadeout=delay)
        renpy.music.stop(channel='layer4', fadeout=delay)
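
Since starting and stopping walk through the same five channels, one mapping could drive both. Here's a rough sketch of that idea: `LAYER_FILES`, `start_all_layers` and `stop_all_layers` are made-up names, and the play/stop functions are passed in so the example runs without Ren'Py (in a game you'd pass `renpy.music.play` and `renpy.music.stop`).

```python
# Channel name -> audio file, using the paths from the post.
LAYER_FILES = {
    "layer1a": "audio/music/SoNice1a.wav",
    "layer1b": "audio/music/SoNice1b.wav",
    "layer2": "audio/music/SoNice2.wav",
    "layer3": "audio/music/SoNice3.wav",
    "layer4": "audio/music/SoNice4.wav",
}


def start_all_layers(play, delay=3):
    """Start every layer with synchro_start so they all begin together."""
    for channel, filename in LAYER_FILES.items():
        play(filename, channel=channel, loop=True,
             synchro_start=True, fadein=delay)


def stop_all_layers(stop, delay=None):
    """Stop every layer, optionally fading out over `delay` seconds."""
    for channel in LAYER_FILES:
        stop(channel=channel, fadeout=delay)


# Stand-ins for renpy.music.play / renpy.music.stop that just record calls.
calls = []
start_all_layers(lambda filename, **kw: calls.append(("play", filename, kw)), delay=5)
stop_all_layers(lambda **kw: calls.append(("stop", kw)), delay=2)
```

Adding a sixth layer then only means adding one line to the mapping (plus registering its channel).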

4) The key part - Adjusting the levels

So, with all that set up we have a bunch of tracks playing together... but nothing is actually dynamic. So how do we do that? In my case, I base their volumes on a global value: an affectation stat that gets changed as you make choices, and is a percentage from 0 to 100. So I just run the function by itself and the levels shift based on that, but you could instead pass a number to your function and adjust based on it.

I made my numbers quite convoluted. I'll post them at the end of this section for the curious, but to avoid scaring people, here's a simpler way this function could be set up:

    def update_layers(delay=1):
        # affectation, minimum and maximum are global values defined elsewhere
        if affectation >= maximum:
            layer1a = 1
            layer1b = 1
            layer2 = 0
            layer3 = 0
            layer4 = 0
        elif maximum > affectation > minimum:
            # How far affectation sits between minimum (0.0) and maximum (1.0)
            scale = (affectation - minimum) / float(maximum - minimum)
            layer1a = scale
            layer1b = scale
            layer2 = 1.0 - scale
            layer3 = 1.0 - scale
            layer4 = 1.0 - scale
        else:
            layer1a = 0
            layer1b = 0
            layer2 = 1
            layer3 = 1
            layer4 = 1
        # Fade each channel to its new volume over `delay` seconds
        renpy.music.set_volume(layer1a, delay=delay, channel='layer1a')
        renpy.music.set_volume(layer1b, delay=delay, channel='layer1b')
        renpy.music.set_volume(layer2, delay=delay, channel='layer2')
        renpy.music.set_volume(layer3, delay=delay, channel='layer3')
        renpy.music.set_volume(layer4, delay=delay, channel='layer4')

The first chunk is where Maths happens. This is where I simplified it all down for explaining. The most simple version is you have a max value and a minimum value. At max, you want just layer 1a and 1b to be at full volume (1), while layers 2-4 are all muted (volume 0). At min, you want the opposite. That's what the first and third blocks do. Everything in between is handled by the middle section. You calculate the "scale" by working out how far the value is, as a fraction, between the minimum and maximum values. This is basically a way of measuring how far it has progressed from one marker to the next.

Scale will be a number between 0 and 1, which is the scale that channel volumes use. So you can directly set the volume of layer1a and 1b to equal this scale, as it will get louder the closer you get to the maximum value, and quieter the closer you get to the minimum.

For layers 2-4 we want the opposite effect. So for that we instead say we should get 1.0 - scale, to basically invert things so that it will get louder as it gets closer to the minimum, and quieter as it gets closer to the maximum.
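
To see the numbers without running the game, here's the same linear cross-fade as a plain function you can poke at. It's a sketch under a couple of assumptions: `crossfade_volumes` is a made-up name, and the default bounds of 0 and 100 are just for illustration. It clamps the scale instead of using separate if/else blocks, which gives the same result at and beyond both ends.

```python
def crossfade_volumes(value, minimum=0, maximum=100):
    """Return a volume (0.0 to 1.0) per channel for a meter value."""
    # How far value sits between minimum (0.0) and maximum (1.0), clamped.
    scale = (value - minimum) / float(maximum - minimum)
    scale = max(0.0, min(1.0, scale))
    return {
        "layer1a": scale,
        "layer1b": scale,
        "layer2": 1.0 - scale,
        "layer3": 1.0 - scale,
        "layer4": 1.0 - scale,
    }


quarter = crossfade_volumes(25)  # a quarter of the way from minimum to maximum
```

With these bounds, a value of 25 puts layers 1a and 1b at a quarter volume and layers 2-4 at three quarters.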

This can be hard to conceptualise, so I recommend having a test section where you shift numbers and just check if it sounds right, then adjust accordingly. This is what I had to do because mine was... much more complicated, and I definitely had some incorrect code at first until I noticed my mistake. But that's it, you're all set to use it!

As promised, here's my very overly complex and fiddly math. I have it shifting at different scales based on roughly where in the scale you are, so that some tracks only come in lower in the scale. If this looks very confusing and hard to parse, it is. I include it to show how fiddly you can get with it, but please ignore it if you need to and go on to the final step!

    def update_layers(delay=1):
        if affectation >= 100:
            layer1a = 1
            layer1b = 1
            layer2 = 0
            layer3 = 0
            layer4 = 0
        elif 100 > affectation > 75:
            # scale runs from 0 at 75 up to 1 at 100
            scale = (affectation - 75.0) / 25.0
            layer1a = 1
            layer1b = 0.6 + (scale * 0.4)
            layer2 = 0.7 - (scale * 0.7)
            layer3 = 0
            layer4 = 0
        elif affectation == 75:
            layer1a = 1
            layer1b = 0.6
            layer2 = 0.7
            layer3 = 0
            layer4 = 0
        elif 75 > affectation > 25:
            # scale runs from 0 at 75 up to 1 at 25, so each layer moves
            # smoothly from its value at 75 to its value at 25
            scale = (75.0 - affectation) / 50.0
            layer1a = 1.0 - (0.5 * scale)
            layer1b = 0.6 - (0.3 * scale)
            layer2 = 0.7 + (0.3 * scale)
            layer3 = 0.3 * scale
            layer4 = 0
        elif affectation == 25:
            layer1a = 0.5
            layer1b = 0.3
            layer2 = 1
            layer3 = 0.3
            layer4 = 0
        elif affectation > 0:
            # scale runs from 0 at 0 up to 1 at 25
            scale = affectation / 25.0
            layer1a = 0.5 * scale
            layer1b = 0.3 * scale
            layer2 = 1
            # layers 3 and 4 get louder as affectation drops toward 0
            layer3 = 1.0 - (0.7 * scale)
            layer4 = 1.0 - scale
        else:
            layer1a = 0
            layer1b = 0
            layer2 = 1
            layer3 = 1
            layer4 = 1
        # Fade each channel to its new volume over `delay` seconds
        renpy.music.set_volume(layer1a, delay=delay, channel='layer1a')
        renpy.music.set_volume(layer1b, delay=delay, channel='layer1b')
        renpy.music.set_volume(layer2, delay=delay, channel='layer2')
        renpy.music.set_volume(layer3, delay=delay, channel='layer3')
        renpy.music.set_volume(layer4, delay=delay, channel='layer4')
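
If the branches above are hard to keep straight, one alternative is to store each layer's volume at the breakpoints and interpolate between them. This is a sketch, not what com__et actually runs: the breakpoint values are the ones the function above assigns at exactly 0, 25, 75 and 100, while `KEYFRAMES`, `interpolate` and `layer_volumes` are names invented for this example.

```python
# (affectation, volume) breakpoints per layer, taken from the values the
# function above assigns at exactly 0, 25, 75 and 100.
KEYFRAMES = {
    "layer1a": [(0, 0.0), (25, 0.5), (75, 1.0), (100, 1.0)],
    "layer1b": [(0, 0.0), (25, 0.3), (75, 0.6), (100, 1.0)],
    "layer2": [(0, 1.0), (25, 1.0), (75, 0.7), (100, 0.0)],
    "layer3": [(0, 1.0), (25, 0.3), (75, 0.0), (100, 0.0)],
    "layer4": [(0, 1.0), (25, 0.0), (75, 0.0), (100, 0.0)],
}


def interpolate(points, x):
    """Piecewise-linear interpolation, clamped to the first/last point."""
    if x <= points[0][0]:
        return points[0][1]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x <= x1:
            t = (x - x0) / float(x1 - x0)
            return y0 + t * (y1 - y0)
    return points[-1][1]


def layer_volumes(affectation):
    """Volume for every layer at the given affectation value."""
    return {name: interpolate(points, affectation)
            for name, points in KEYFRAMES.items()}


midpoint = layer_volumes(50)  # halfway between the 25 and 75 breakpoints
```

Each result could then be handed to `renpy.music.set_volume(volume, delay=delay, channel=name)`. Tweaking the mix becomes a matter of editing numbers in the table rather than rewriting the maths.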

5) Use it!

That's all the setup; you can now just use your code in scenes. The usage is very simple: at any point you can call these functions by dropping in a line of Python code that starts with $. Here's a sample of how the script would look:

    label begin:
        scene bg
        $ update_layers()
        $ start_layers(5)
        "Hey! My music has started. I wanna make it sound different though."
        $ affectation = 25
        $ update_layers()
        "Sweet, now it sounds different. That's enough though, I'm gonna play a normal song."
        $ stop_layers()

In my code I actually have a function for updating affectation because it does a few things. One of those is automatically calling update_layers, to save me always needing that second line when updating the variable. It also, for instance, caps the affectation value so it never goes beyond my min and max values. (For my game it also prevents affectation from actually changing until you've gotten the false end first.) I recommend making all sorts of convenience functions for things like this.

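    def adjust_affectation(add):
        global affectation
        # Until the false end has been reached, keep the stat pinned at max
        if not false_end:
            affectation = MAX_AFFECT
            return
        affectation += add
        # Clamp to the 0..MAX_AFFECT range
        affectation = min(MAX_AFFECT, affectation)
        affectation = max(0, affectation)
        # Re-mix the layers to match the new value
        update_layers()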
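
The clamping step is the bit that's easiest to get subtly wrong, so here it is in isolation as a quick sketch. `clamp_affectation` is a made-up helper name, and `MAX_AFFECT = 100` is my assumption for the example since the value isn't shown above.

```python
MAX_AFFECT = 100  # assumed value for this example


def clamp_affectation(current, add, maximum=MAX_AFFECT):
    """Return current + add, kept within 0..maximum."""
    return max(0, min(maximum, current + add))


after_gain = clamp_affectation(90, 25)   # 115 would overshoot the maximum
after_loss = clamp_affectation(10, -30)  # -20 would undershoot zero
```

Keeping the value in range means update_layers never has to deal with numbers outside the meter.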

I hope that was helpful, please ask if any of this was confusing or you want to know a bit more about how to handle this in your case! I would also say broadly to check out the handy tools and features that ren'py gives you access to. It's a very simple engine that also allows you to do a lot of your own stuff on top of it, which is always very fun and encourages me to play around with stuff like this.

If you found this helpful, keep an eye out for com__et releasing soon, also check out the soundtrack now up for you to hear! The full version of the dynamic track in different states is on there, as well as all the individual layers as bonus tracks.

https://binarhythmia.bandcamp.com/album/com-et-soundtrack

Get com__et

com__et

A short visual novel about escaping fate and discovering ______ words that lead to your ____ End.

| Field | Value |
| --- | --- |
| Status | Released |
| Author | SuperBiasedGary |
| Genre | Visual Novel |
| Tags | Horror, Lesbian, LGBTQIA, Multiple Endings, Psychological Horror, Queer, Romance, Short |
| Languages | English, Spanish; Castilian, French, Chinese (Simplified) |

Comments


Is it possible to scale the music using true/false statements for variables by any chance? I'm testing it out currently, but I'm unsure if this code was built for that kind of usage.

That's definitely doable! Instead of lines like

    if affectation >= 100:
    elif affectation == 75:
    elif affectation == 25:
    else:

you could use any kind of true/false statements like

    if happy == True:
    elif annoyed == True:
    elif angry == True:
    else:

A reason I used numbers was to have things scale the whole way along a meter. But you could leave out those in between points and just use a simpler set of true/false statements.
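
As a rough sketch of that simpler version: the flag names match the example above, the "annoyed" and "angry" mixes are placeholder numbers made up purely for illustration, and `volumes_for_mood` is a hypothetical helper name.

```python
def volumes_for_mood(happy=False, annoyed=False, angry=False):
    """Return a fixed volume per layer for the first mood flag that is set."""
    if happy:
        levels = (1.0, 1.0, 0.0, 0.0, 0.0)
    elif annoyed:
        levels = (0.5, 0.5, 0.5, 0.0, 0.0)  # placeholder mix
    elif angry:
        levels = (0.0, 0.0, 1.0, 1.0, 0.0)  # placeholder mix
    else:
        levels = (0.0, 0.0, 1.0, 1.0, 1.0)
    names = ("layer1a", "layer1b", "layer2", "layer3", "layer4")
    return dict(zip(names, levels))


annoyed_mix = volumes_for_mood(annoyed=True)
```

Each volume would then go to `renpy.music.set_volume` for its channel, same as in the post.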

Also my username on the discord is SuperBiasedGary. Feel free to DM if you'd want me to take a look at your specific code!

Wow, although I knew the theory of how this would work the implementation is actually more complex than I expected!

Fantastic work and thank you for sharing!

Yeah, very much a thing where I built upon it to make things easier on myself over time, getting as close as I could to playing it like a regular track. Glad it was interesting!